Author: Dave Smith

Categories: PHP Tutorials, PHP Performance, Sponsored

While there are many facets to scalability, load testing is how you prove your concept in development instead of crossing your fingers and hoping everything works out in production.

Learn some of the basic concepts behind load testing, like bottlenecks, scaling, concurrent virtual users and instantaneous peak.

Read this article to learn how to perform proper Web site load testing with the WAPT tool.

Introduction

Bottlenecks

Vertical and Horizontal Scaling

A Lesson Learned

Development to Production

Virtual Users

Load Agents

The Path to Performance

Available Modules

Conclusion

Introduction

You, or your client, have a great idea that will change how the world works. The concept moves from proof to development and finally to production on the web, and everyone involved celebrates their future success.

Your service, product, or whatever you are offering steadily becomes more popular, and there is more celebration as hits to your site grow, until you get the call...

The Web site is running a little sluggishly. Pages start taking several seconds to load, more and more users time out, and eventually the whole thing goes down as the server crashes.

In this article we will look at scalability, which is the ability of our web applications to handle increasing workloads, along with some of the issues we will face and the options available to manage them.

I will be using the WAPT Pro tool provided by SoftLogica for web application performance testing to simulate real world visitors and record performance data, which is known as load testing. The fully functional commercial tool has a 30-day free trial; however, the trial is limited to 20 virtual users instead of the 2,000 available in the registered product. With the x64 Load Engine it has the capacity to test up to 10,000 virtual users.

Bottlenecks

If you are not familiar with the term bottleneck, it literally describes how liquid backs up inside a bottle as it passes through the thinner neck. For our purposes, it is where the flow of web traffic will start to back up as processes require more time or resources to complete a request.

What happens when traffic encounters a bottleneck depends primarily on how much traffic arrives at the same time, known as concurrent users, how quickly the request can be handled with available resources and how much traffic is heading down the pipeline.

Normally traffic will arrive at a bottleneck in manageable numbers where the available resources can clear out the requests and be available for the next wave of traffic heading its way.

In a situation where more concurrent users arrive than resources can quickly manage, the traffic heading down the pipeline to the bottleneck crashes into the waiting traffic and starts to back up.

The problem becomes even worse as resources become scarce for other processes, until a critical mass is reached and everything crashes.
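
The backing-up effect described above can be sketched with a few lines of Python. This is only a toy model under stated assumptions: time is split into equal ticks, the server clears a fixed number of requests per tick, and the function name and traffic figures are made up for illustration.

```python
def simulate_backlog(arrivals_per_tick, capacity_per_tick):
    """Return the number of requests still waiting at the end of each tick."""
    backlog = 0
    history = []
    for arrivals in arrivals_per_tick:
        backlog += arrivals                          # new traffic joins the queue
        backlog -= min(backlog, capacity_per_tick)   # the server clears what it can
        history.append(backlog)
    return history


# Both patterns deliver 60 requests in total to a server that clears 10 per tick.
steady = simulate_backlog([10, 10, 10, 10, 10, 10], capacity_per_tick=10)
burst = simulate_backlog([2, 2, 40, 12, 2, 2], capacity_per_tick=10)
print(steady)   # [0, 0, 0, 0, 0, 0]
print(burst)    # [0, 0, 30, 32, 24, 16]
```

The total traffic is identical in both runs. Only the burst leaves requests waiting, and the queue is still draining several ticks after the burst has passed.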

It is important to understand what a concurrent user is. We have a tendency to look at our Web statistics as the number of page hits within a specified period of time. Even if you are looking at the number of hits per minute, processes can clear out requests in milliseconds so a large amount of traffic can be managed as long as it arrives nicely spread out over that minute.

A concurrent user is traffic that arrives at the same time which in turn makes demands on resources at the same time.
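
To make the difference between hits per minute and concurrent users concrete, here is a minimal Python sketch. It assumes each request is described by a start time and a processing time in milliseconds; the function name and sample numbers are hypothetical.

```python
def peak_concurrency(requests):
    """requests: list of (start_ms, duration_ms). Return the most requests in flight at once."""
    events = []
    for start, duration in requests:
        events.append((start, 1))               # a request begins
        events.append((start + duration, -1))   # the same request finishes
    # Sorting puts finishes (-1) before starts (+1) when they share a timestamp.
    events.sort()
    in_flight = peak = 0
    for _, delta in events:
        in_flight += delta
        peak = max(peak, in_flight)
    return peak


# 60 hits in one minute, each taking 200 ms to process.
spread_out = [(i * 1000, 200) for i in range(60)]   # one hit per second
all_at_once = [(0, 200) for _ in range(60)]         # every hit in the same instant
print(peak_concurrency(spread_out))    # 1
print(peak_concurrency(all_at_once))   # 60
```

Both traffic patterns show up as 60 hits per minute in the statistics, yet one needs resources for a single request at a time and the other for 60.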

To prevent traffic from backing up and escalating to critical mass, we have to reduce the amount of time it takes to process a request by widening or eliminating a bottleneck, or by throwing more resources at it to spread out the work load.

We need solutions in place capable of managing the most concurrent users we can expect, known as instantaneous peak. We call this scaling.

Vertical and Horizontal Scaling

Vertical scaling adds resources to a single unit, like more memory, more processors, faster processors and greater storage. From my viewpoint as a developer, this also includes reducing the load on resources through good coding practices and software solutions.

Horizontal scaling adds resources by adding more units, for example adding more servers to a data center. As long as the software can take advantage of this distributed model, it can share resources across the entire data center.

One horizontal scaling technique, called high availability, is to cluster hardware so that it contains multiple instances of your project, which allows servers to share the load and increases scalability. A high availability system can handle requests continuously with minimal or no down time. A scalable system can provide high availability if it can handle the increasing load using more resources without needing to change its architecture.

In the past, there was always a balancing act between vertical scaling, which is cost effective but limited, and horizontal scaling, which offers greater capacity at greater cost. Today, these issues are becoming less of a concern as we have greater access to cloud platforms which will automatically scale both vertically and horizontally depending on the needs at any given moment.

I do want to step away from hardware solutions and take a brief look at some software solution examples.

One of the most common bottlenecks we face is data storage and retrieval with databases. An RDBMS (Relational Database Management System) like MySQL can be resource intensive, especially with complicated queries that require multiple passes across multiple tables to retrieve the data.

Properly indexing a table can eliminate a lot of problems, however you must remember that indexes do use more resources in the form of storage space.
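
As a rough illustration of what an index changes, the sketch below uses Python's built-in sqlite3 module as a stand-in for MySQL so that it runs without a database server. The table, column and index names are hypothetical, and the exact plan wording differs between database engines. In MySQL you would inspect the query with EXPLAIN instead.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE visits (id INTEGER PRIMARY KEY, user_id INTEGER, page TEXT)")
conn.executemany(
    "INSERT INTO visits (user_id, page) VALUES (?, ?)",
    [(i % 500, f"/page/{i % 40}") for i in range(20000)],
)


def query_plan(sql):
    """Return SQLite's description of how it intends to run the query."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql).fetchall()
    return " | ".join(row[3] for row in rows)   # the fourth column holds the detail text


lookup = "SELECT page FROM visits WHERE user_id = 42"
plan_before = query_plan(lookup)                 # expected to report a full table scan
conn.execute("CREATE INDEX idx_visits_user ON visits (user_id)")
plan_after = query_plan(lookup)                  # expected to report a search using the index
print(plan_before)
print(plan_after)
```

Without the index the engine has to read every row to find the matches. With it, the lookup goes straight to the matching rows, at the price of the extra storage the index occupies.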

Large data sets can be partitioned, which groups the data into smaller partitions of a table. However, MySQL has deprecated the generic partitioning handler, which will be removed in version 8. You will need to migrate your data to a storage engine that has native support for partitions, like InnoDB.

Results from resource intensive queries can be cached using memcached, which allows the same result to be delivered to multiple users from a server side cache, without having to access the database multiple times.
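
The caching idea can be sketched in plain Python. The class below is a tiny in-process stand-in for a server-side cache such as memcached, not a memcached client, and the class name, key and report function are assumptions made for the example.

```python
import time


class TtlCache:
    """Tiny in-process stand-in for a server-side cache such as memcached."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self.store = {}                      # key -> (expires_at, value)

    def get_or_compute(self, key, compute):
        now = self.clock()
        hit = self.store.get(key)
        if hit is not None and hit[0] > now:
            return hit[1]                    # fresh cached result, no database work
        value = compute()                    # miss or expired: run the expensive query once
        self.store[key] = (now + self.ttl, value)
        return value


db_calls = 0


def expensive_report():
    """Hypothetical slow query; here it only counts how often it runs."""
    global db_calls
    db_calls += 1
    return ["row-1", "row-2"]


cache = TtlCache(ttl_seconds=30)
for _ in range(100):                         # 100 visitors ask for the same report
    cache.get_or_compute("top-report", expensive_report)
print(db_calls)                              # 1
```

The time-to-live is the trade-off to tune: a longer value saves more database work but serves older results.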

If you do not need a relational database model, you can use NoSQL databases which are designed to be highly scalable.

Another common bottleneck is complicated mathematical equations, especially with data mining. As the need arose to process more and more data into results which could be understood and used, algorithms were developed as mathematical shortcuts to arrive at the same results.

A Lesson Learned

As I contemplated a good working example to highlight the need for developing with scalability in mind, I could not think of anything better than the real world healthcare.gov debacle.

In case you are not familiar with the background, the United States Government passed into law a healthcare reform act, better known as Obamacare, named after President Obama. Part of this law required every American citizen to enroll into the system through the federal government, primarily using the healthcare.gov Web site.

There was a large marketing campaign to ensure every American knew that they were required to enroll and a countdown to the launch of healthcare.gov. It cost $800 million, yes I said million, US dollars to develop and was the pride of the current administration. As the clock ticked down to zero and the site was officially launched, it crashed.

While I am sure the developers tested everything against loads, they did not appear to take into account instantaneous peaks. The government had to consult with industry leaders and implement scalable solutions, and with $2.1 billion, yep billion, dollars now invested they were finally able to implement the law. While they did eventually recover, enrollment deadlines had to be extended and their reputation was permanently damaged.

I understand that most of us will never experience this kind of load at launch, however it is important to consider that, in most cases, we do want to become more and more popular and must develop with scalability in mind.

Development to Production

We should be testing our applications against instantaneous peak while we are in development. The question is, what is our instantaneous peak level?

To be honest, it is our best guess. We can help make that guess a little more informed by doing some math. The first thing we need to know is how many hits per day our bottlenecks will reasonably receive. We won't have any hard data in development, so let's choose a number which is a little higher than our expectations on a busy day, let us say, 4,000 hits a day.

Next we need to determine how many hours a day are peak hours, in other words, how many hours is the bulk of traffic on our site. For this example we will say that most of the traffic arrives within a 10 hour period.

We will use these 2 figures to determine our average visitors per hour, which is 4,000 (visits per day) divided by 10 (peak hours per day). 4,000 / 10 = 400 average visitors per hour.

We need to turn that into concurrent users, so we need to estimate the length of time a user spends on the site. With this figure we will be able to calculate the likelihood of requests arriving at the same time. We will say that, on average, a visitor stays on the site for 10 minutes.

To get our average concurrent users (Cu), we divide our average visitors per hour (Vh) by the number of visits of average duration (Vd, in minutes) that fit into an hour. There are 60 minutes in an hour, so the formula will be...

Cu = Vh / ( 60 / Vd )

400 / ( 60 / 10 ) = 66.67 = 67 rounded up.

We have estimated that our typical peak workload will be 67 concurrent users. We still need to determine the instantaneous peak (P) that we have to be prepared to deal with.

This is subjective, based on how important it is that a user experiences no delays. If it is okay for a few users to experience an occasional delay then we would use an importance (I) factor of 3. If it is really important that no traffic is delayed then we would use a factor of 6.

In our case we will say it is somewhat important and use a factor of 4.5, right down the middle. 67 concurrent users times a factor of 4.5 is 301.5, or about 300 users for an instantaneous peak (the unrounded 66.67 times 4.5 is exactly 300). Our complete formula to determine how many users we need to scale to, our instantaneous peak, is...

P = Vh / ( 60 / Vd ) * I

400 / ( 60 / 10 ) * 4.5 = 300
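
The two formulas above translate directly into code. The sketch below is a plain Python restatement using the article's example figures; the function and variable names are my own choice.

```python
def average_concurrent_users(visitors_per_hour, visit_minutes):
    """Cu = Vh / (60 / Vd)"""
    return visitors_per_hour / (60 / visit_minutes)


def instantaneous_peak(visitors_per_hour, visit_minutes, importance):
    """P = Vh / (60 / Vd) * I"""
    return average_concurrent_users(visitors_per_hour, visit_minutes) * importance


hits_per_day = 4000
peak_hours = 10
vh = hits_per_day / peak_hours                   # 400 average visitors per hour
cu = average_concurrent_users(vh, visit_minutes=10)
p = instantaneous_peak(vh, visit_minutes=10, importance=4.5)
print(round(cu, 2), round(p))                    # 66.67 300
```

Keeping the inputs as parameters makes it easy to rerun the estimate with real figures once the site is in production.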

Once we have actually moved into production we will have accurate data. If we have developed and scaled our systems to handle a 300 instantaneous peak, then it is important to continue to monitor the production stats. The formula is the same to determine our real time instantaneous peak and will allow us to increase scale as we approach the maximum levels we developed for.

Virtual Users

If you want your load test results to be useful, then you must accurately simulate real users and how they interact with your site. Just sending some traffic to your site and being satisfied that there were no network errors is not enough. Refer to the lesson learned section earlier in this article.

I will be using the WAPT tool from SoftLogica, which allows me to easily generate virtual users and record typical user interactions across an entire website.

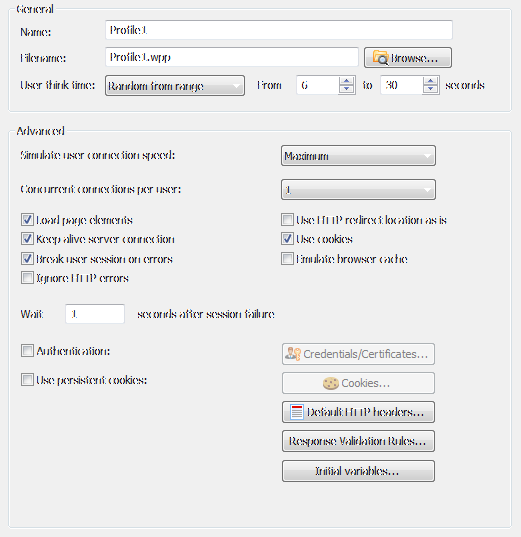

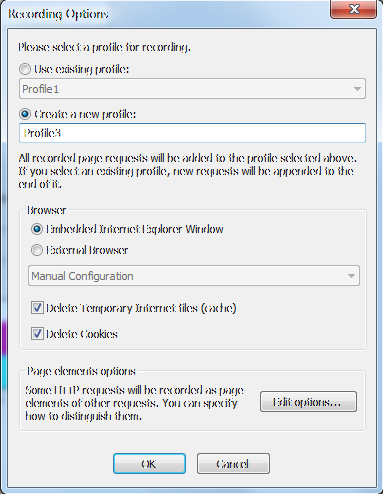

I personally created a few virtual user profiles and recorded different common interactions for each one. As you can see in the example, the basic options are fairly self explanatory. I do want to draw your attention to the user think time, which is a delay applied to simulate the time it takes a user to process the information on a page before starting another request.

For this test, I expect a user will wait between 6 and 30 seconds. Not setting this field will simulate processing at computer speeds and will quickly use up local processors, requiring additional load agents.
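
To see why think time matters so much, here is a back-of-the-envelope sketch in Python. It assumes think times are spread uniformly between the minimum and maximum, which is my simplification and not necessarily how WAPT draws them, and all names are hypothetical.

```python
import random


def requests_per_minute(users, think_min, think_max, response_time=0.0):
    """Average load from users who pause a uniform random think time between pages."""
    mean_cycle = (think_min + think_max) / 2 + response_time   # seconds per page view
    if mean_cycle <= 0:
        raise ValueError("think time and response time cannot both be zero")
    return users * 60 / mean_cycle


def sample_session(pages, think_min, think_max, rng):
    """Return the pauses (in seconds) one virtual user would insert between pages."""
    return [rng.uniform(think_min, think_max) for _ in range(pages - 1)]


# 300 virtual users thinking for 6 to 30 seconds between pages.
print(requests_per_minute(300, 6, 30))                      # 1000.0
# The same users with no think time and a 50 ms response time.
print(requests_per_minute(300, 0, 0, response_time=0.05))
print(sample_session(5, 6, 30, random.Random(1)))
```

Under these assumptions, removing the think time multiplies the request rate several hundred times over, which is why the load generator itself runs out of processor time.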

If your needs require a more detailed virtual user, the WAPT Pro tool does have the flexibility and power to manage nearly every case.

Load Agents

A load agent manages your virtual users and their interactions, turning your local computer from one user to up to 2,000 user sessions. Your local computer will have its own limitations depending on available resources and how the load test is set up.

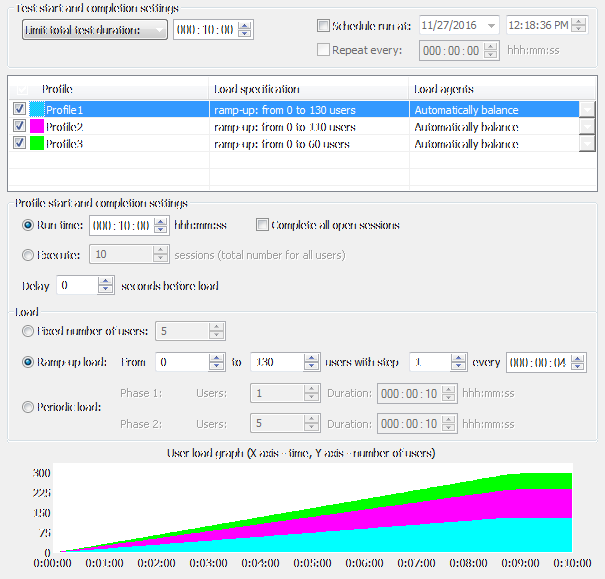

In this test, I am ramping up 3 user profiles to reach my 300 instantaneous peak. If you look at the graph, you can see that I will reach the 300 virtual users after 9 minutes and the final 1 minute will be at full load.
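
The load profile described above can be approximated as follows. This sketch assumes a straight linear ramp for the combined total of all profiles; the real tool may step users in differently, and the function name is hypothetical.

```python
def active_users(minute, peak_users=300, ramp_minutes=9, hold_minutes=1):
    """Linear ramp from 0 to peak_users, then hold at full load until the test ends."""
    if minute < 0 or minute > ramp_minutes + hold_minutes:
        return 0                                   # outside the test window
    if minute >= ramp_minutes:
        return peak_users                          # full-load plateau
    return round(peak_users * minute / ramp_minutes)


schedule = [active_users(m) for m in range(11)]
print(schedule)   # [0, 33, 67, 100, 133, 167, 200, 233, 267, 300, 300]
```

A gradual ramp like this lets you read off the user count at which response times start to degrade, rather than only learning whether the server survives the full load.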

For anyone interested, each virtual user will be accessing a MySQL table with 200,000 records, sorting on different columns (both integers and strings), limiting records and retrieving pages containing 25 or 50 records. Both the load agent and the server are on one box with a 6-core AMD FX-6300 CPU at 3,500 MHz, 8 GB of memory and an SSD (solid state drive).

For large scale tests you will need the ability to set up load agents on multiple computers to share the virtual user load. WAPT Pro allows you to accomplish this distributed testing, supporting 2 load agents with 2,000 virtual users each out of the box. Should you need more than that, you can license as many load agents as your project requires.

The Path to Performance

For this example, the test is being run against a single server which is not configured to share resources within a data center so that we can see where scaling is needed.

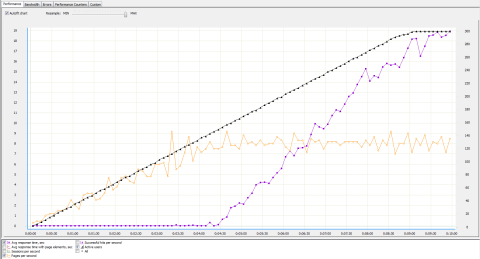

In our example results, the black line is the number of active virtual users, the yellow line is the pages per second and the purple line is the average response time in seconds.

Ideally, we want to see the response time (purple line) flat line across the bottom of the chart. At 140 active users the response time took a small hit of 0.5 seconds and then recovered. By the time we ramped up to 150 users, our response time was around 1 second and we can start to see the cumulative effect as traffic begins backing up. When we finally reach our full load of 300 users the response time is around 17 to 19 seconds.
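
If you export the measurements, finding the point where response time crosses an acceptable limit is easy to automate. The Python sketch below uses three approximate points taken from the description above, not exported tool data, and the function name and thresholds are assumptions.

```python
def first_breach(samples, threshold_seconds):
    """samples: list of (active_users, avg_response_seconds), in any order.
    Return the lowest user count whose response time exceeds the threshold, or None."""
    for users, seconds in sorted(samples):
        if seconds > threshold_seconds:
            return users
    return None


# Approximate points read from the chart description, for illustration only.
samples = [(140, 0.5), (150, 1.0), (300, 19.0)]
print(first_breach(samples, threshold_seconds=0.75))   # 150
print(first_breach(samples, threshold_seconds=2.0))    # 300
print(first_breach(samples, threshold_seconds=30.0))   # None
```

With a full set of samples, the returned user count gives a first estimate of the capacity of the current setup for a given response time target.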

While this graph gives us a decent overview, we have other report data like request errors and the capability to drill down further into the test to see what is happening with each user session at any given time, which will help us locate problem pages, bottlenecks, etc...

This test did not generate any request errors, so we had not reached critical mass and every user did eventually get their results. However, 19 seconds is longer than most users will wait.

Remember that we were also testing against instantaneous peak, so we can project that our site would recover fairly quickly, within a few minutes, as long as the concurrent users in the pipeline were around our typical average of 67.

To be properly scaled, our web site would need at least twice the resources currently available to it to avoid any inconvenience to our visitors. This also gives us a good base for when we go into production by monitoring the growth and determining when we would need to deal with scalability before it becomes an issue.

Available Modules

While WAPT Pro works great right out of the box, there are additional modules which will improve testing if you work heavily with specific technologies.

ASP.NET - Web applications implemented in ASP.NET use several parameters to pass session-specific data between the client and the server. The Module for ASP.NET testing ensures that these parameters are always delivered correctly.

JSON - JSON format is often used for serializing and transmitting structured data between the client and server parts of a web application. If your application uses JSON, the Module for JSON format can provide several benefits for you.

Adobe Flash - Flash-based web applications created with the help of Adobe Flex use a special method for client-server communication. It is based on sending AMF (Action Message Format) messages with binary data.

These messages are embedded into regular HTTP requests, so WAPT or WAPT Pro can record and replay such tests. However, the original product cannot modify the content of an AMF message to insert any dynamically changing data the same way it modifies the parameters of regular requests.

This problem is resolved by a special Module for Adobe Flash testing. It can be installed on the system where you run WAPT or the workplace component of WAPT Pro.

Silverlight - Silverlight applications use a special MSBin1 binary format to pass data between the client and server. These data structures are embedded into regular HTTP requests, so WAPT and WAPT Pro can record and replay such tests.

However the original product cannot modify the content of the passed structures to create data-driven tests. This problem is resolved by a special Module for Silverlight testing. It can be installed on the system where you run WAPT or the workplace component of WAPT Pro.

GWT - The client part of a GWT application is often implemented in such a way that it can send remote procedure calls (RPC) to the server part. Such calls are passed inside special HTTP requests containing serialized data structures that are used as function arguments.

The server replies with the function return value which can also be a complex data structure. This reply is provided in the corresponding HTTP response. Even though the client-server communication inside a GWT application is done in text format, it is usually hard to parameterize, because all session-specific values can be hidden inside complex structures representing Java classes of your application.

Binary - Proprietary HTTP-based protocols may use binary messages to pass data from clients to the server and vice versa. For example, such a message can contain a serialized Java object.

The Module for Binary formats lets you work with any type of such messages. Binary bodies of HTTP requests are converted to the hexadecimal representation. You can edit it and insert variables containing hexadecimal code of session-specific binary values.

A special additional function is provided for the processing of binary server responses. It can extract binary values from them by the left and right boundaries and/or by the offset and length. The extracted value can be assigned to a WAPT variable in hexadecimal form or as a string of text.

SharePoint - MS SharePoint Web applications use a special "X-RequestDigest" header containing an important value also called the "form digest". The same value appears as a parameter of some POST requests.

This is a code that is inserted into each page by SharePoint and is used to validate the client requests. SharePoint uses this method as a form of security validation that helps prevent attacks with malicious data posting.
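
The Binary module described earlier in this section extracts values by left and right boundaries or by offset and length. To show what those two strategies mean, here is a generic Python sketch of the concept. It is not WAPT's API, and the function names and sample response are hypothetical.

```python
def extract_between(body, left, right):
    """Return the bytes between the first `left` marker and the next `right` marker."""
    start = body.find(left)
    if start == -1:
        return None
    start += len(left)
    end = body.find(right, start)
    if end == -1:
        return None
    return body[start:end]


def extract_at(body, offset, length):
    """Return `length` bytes starting at `offset`, or None if the body is too short."""
    if offset < 0 or offset + length > len(body):
        return None
    return body[offset:offset + length]


# Hypothetical binary response: 2-byte header, marker, 4-byte session id, terminator.
response = bytes.fromhex("0001") + b"SID=" + bytes.fromhex("deadbeef") + b";" + b"rest"
print(extract_between(response, b"SID=", b";").hex())   # deadbeef
print(extract_at(response, 6, 4).hex())                 # deadbeef
```

Boundaries are the more robust choice when the surrounding message can change length, while offset and length suit fixed-layout formats.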

Conclusion

If your Web site project is expected to handle multiple concurrent requests, then you must develop with scalability in mind. While there are many facets to scalability, load testing is how you prove your concept in development instead of crossing your fingers and hoping everything works out in production.

Investing in the proper tools, like WAPT Pro, can not only save you time and money in the long run, it can also save your reputation.

When dealing with clients, no news is usually good news. A crash caused by load you were not able to scale to is very bad news, and I am positive that everyone important to your success is going to hear about it. The better your tool simulates real user interactions, the more useful and accurate your load test results will be.
